This project is part C02 of the CRC 1646 “Linguistic Creativity in Communication”. It investigates how speakers make creative use of their verbal and gestural resources in communicatively challenging situations. We combine psycholinguistic experiments with AI-based computational cognitive modeling to develop a model of speech-gesture creativity and to create simulations of robust, effective multimodal speakers.

Generating dialog-based analogical explanations about everyday tasks

This project is part of the SAIL network (Sustainable Life-Cycle of Intelligent Socio-Technical Systems), funded by the Ministry of Culture and Science of the State of NRW. Within SAIL, we contribute to research theme R1: “Human agency to shape cooperative intelligence”. Various tasks and domains exist where […]

HiAvA investigates and develops technologies that enable multi-user applications in Social VR, mitigating the challenges of social distancing. The goal is to improve on current solutions by maintaining immersion and social presence even on hardware that supports only limited tracking or rendering. The resulting system should exceed the capabilities of current video communication […]

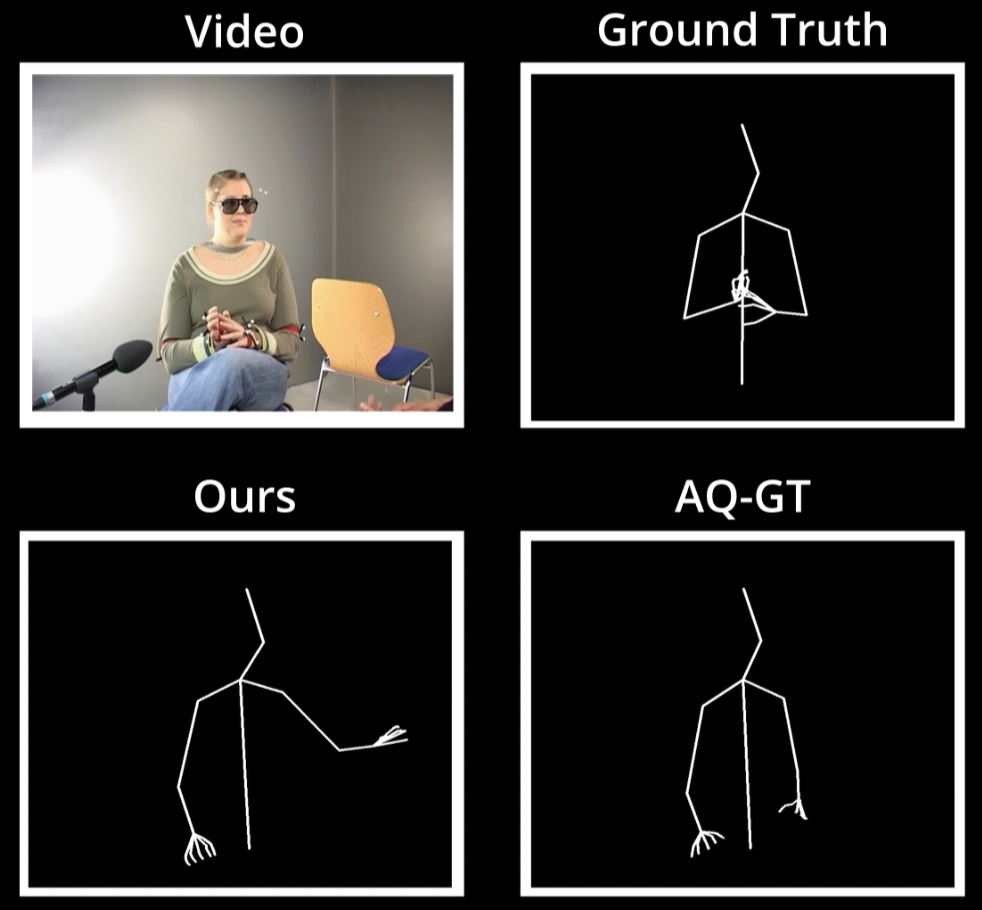

A key challenge for interactive artificial agents is to produce multimodal behavior that is communicatively effective and robust in a given, dynamically evolving interaction context. This project investigates the automatic generation of speech and gesture. We develop cognitive, generative models that incorporate information about the real-time interaction context to allow for adaptive multimodal behavior that can steer […]

The Transregional Collaborative Research Center (TRR 318) “Constructing Explainability” investigates how explanations of algorithmic decisions can be jointly constructed by the explainer and the explainee. Project C05 investigates how human decision makers and intelligent systems can collaboratively explore a decision problem to reach a decision that is accountable and hence explainable. The goal is […]

Adaptive Explanation Generation

2021-2025

The Transregional Collaborative Research Center (TRR 318) “Constructing Explainability” investigates how explanations of algorithmic decisions can be made more efficient when the explainer and the explainee construct them jointly. Project A01 “Adaptive explanation generation” investigates the cognitive and interactive mechanisms of adaptive explanations. The goal of our work is to develop a dynamic, […]

This project explores how AI-based agents can be equipped with an ability to cooperate that is grounded in a Theory of Mind, i.e., the attribution of hidden mental states to other agents, inferred from their observable behavior. In contrast to the usual approach of studying this capability in offline, observer-based settings, we aim to fuse mentalizing with strategic […]
