Autonomous systems using artificial intelligence (AI) to communicate with humans will soon be a part of everyday life. The increasing availability and deployment of such systems can have implications for humans and society at different levels. This project studies those implications with regard to users’ (1) understanding of AI algorithms, (2) communication with machines, and […]

This project is part of the Forschungskolleg “Design of flexible working environments – human-centered use of Cyber-Physical Systems in industry 4.0” run by the Universities of Paderborn and Bielefeld. We investigate how to develop learning, intelligent assistance systems for industrial workers that adapt their level of assistance and autonomy to the internal state of the […]

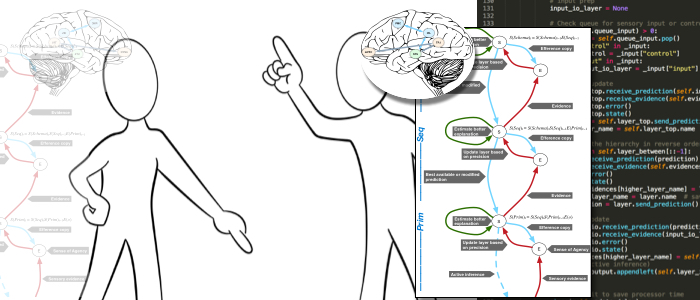

Computational cognitive modeling of the predictive active self in situated action (COMPAS)

2019-2025

The COMPAS project aims to develop a computational cognitive model of the execution and control of situated action in an embodied cognitive architecture that allows for (1) a detailed explanation, in computational terms, of the mechanisms and processes underlying the sense of agency; (2) simulation of situated actions along with the subjectively perceived sense of […]

The VIVA project aims to build a mobile social robot that produces lively and socially appropriate behavior in interaction. We are in charge of developing an embodied communication architecture that controls and mediates the robot’s responsive behavior. In addition, we endow the robot with abilities for cohesive spoken dialogue over long-term interactions.

Mental models in collaborative interactive reinforcement learning

2018-2020

This project is part of the Research Cluster CINEMENTAS (“Collaborative Intelligence Based on Mental Models of Assistive Systems”) funded by a major international technology company. We investigate the role of mental models for interactive reinforcement learning, according to which learning is seen as a dynamic collaborative process in which the trainer and learner together trying to […]

This project aims to provide a detailed account of the development of iconic gesturing and its integration with speech in different communicative genres. We will study pre-school children at 4 to 5 years of age to investigate their speech-accompanying iconic gesture use and to develop a computational cognitive model of their development. We apply qualitative and […]

KOMPASS develops a virtual assistant to support people with special needs in everyday situations, e.g. keeping daily schedules or using video communication. The focus is robust and intuitive dialogue through a better understanding of and adaptation to the mental and emotional states of the user.

BabyRobot aims to develop robots that can support the development of socio-affective, communication and collaboration skills in both typically developing and autistic spectrum children. The goal of the project is to enable robots to share attention, establish common ground and form joint goals with children. We will contribute research on nonverbal gesture-based interaction in relation to shared attention, interpersonal alignment and grounding. Specifically, we develop modules for recognition and synthesis of expressive social signals and gestures on social robot platforms.

L2TOR (pronounced ‘el tutor’) aims to design a child-friendly tutor robot that can be used to support teaching preschool children a second language (L2) by interacting with children in their social and referential world. The project will focus on teaching English to native speakers of Dutch, German and Turkish, and teaching Dutch and German as L2 to immigrant children. We contribute research on interaction management tasks based on decision-theoretic dialogue modeling, probabilistic mental state attribution and incremental grounding.

Social Motorics

2015-2018

This project explores the role of perceptual and motor processes in live social interaction. We are developing a probabilistic model of how prediction-based action observation and mentalizing (i.e. theory of mind) contribute and work together during dynamically unfolding gestural interactions.

Underlying mechanisms of the sense of agency (SoA)

2017-2018

This project investigates the underlying mechanisms of the sense of agency, i.e. the sense that one is in control of one’s own actions and their consequences. We employ behavioral methodologies established in both physical and virtual reality environments, and also examine how the sense of ownership and the sense of agency change in joint actions with robots and virtual agents.

This sub-project of KogniHome looks to integrate a conversational assistant into a smart home environment. In particular, as a joint project with the “Neue Westfälische” newspaper publishing group, we explore new ways to access news media through a collaborative recommender agent, which learns how to interact and collaborate with different users by way of adaptive spoken dialogue capabilities.

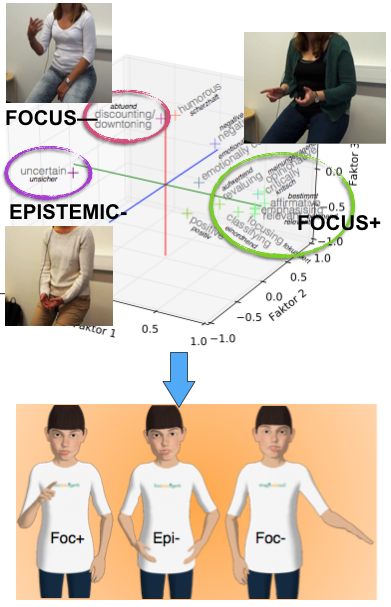

An integral part of natural conversations among humans is that speakers add (indirect) cues to the intended interpretation of their utterances. One particularly interesting phenomenon of such meta-communication is gestural modification, when humans apply non-verbal signals such as gestures and body language in order to enrich the semantic content of their utterances for the listener.

Intelligent Coaching Space (ICSPACE)

2013-2018

ICSPACE explores how to use Virtual Reality (VR) to provide intelligent coaching for sports training, motor skill learning, or physical rehabilitation. We develop a closed-loop intelligent coaching environment that enables online support through multi-modal, multi-sensory feedback delivered by augmented virtual mirrors or action-oriented natural language of a virtual coach.

This sub-project in the technology network “it’s OWL” builds the technological basis of intuitive human-machine interfaces for usable intelligent technical systems in industrial settings (e.g. manufacturing robots able to learn and collaborate). Additionally, we support companies and partners through knowledge transfer and the development of tailored solutions for their practical applications.

FlüGe - Opportunities and challenges of global refugee migration for health care in Germany

2016-2018

The project “FlüGe” studies the health of refugees and aims to overcome barriers that keep refugees from using health care services. We contribute by developing and implementing an eHealth companion app for refugees to navigate the German health care system and to enable easier monitoring of health.

Speech-Gesture Alignment

2006-2015

This project investigated the cognitive mechanisms that underlie the production of multimodal utterances in dialogue. In such utterances, words and gestures are tightly coordinated with respect to their semantics, their form, the manner in which they are performed, their temporal arrangement, and their joint organization in the phrasal structure of the utterance. In particular, we studied, both empirically and with computational simulation, how multimodal meaning is composed and mapped onto verbal and gestural iconic forms, and how these processes interact within and across the two modalities to form a coherent multimodal delivery.

This transfer project developed a human-guided optimization system for logistics planning, based on analysis of an actual logistics process of a mid-sized company. The system allows for interactively exploring, manipulating, and constraining possible solutions in practical work environments at runtime. Our industrial partner has been using it since.

In cooperation with the v. Bodelschwingh Foundation Bethel, we investigated whether spoken-dialogue-based interaction with a virtual assistant is feasible for elderly or cognitively handicapped users. In particular, we studied how different dialogue grounding strategies of the system affect the effectiveness and acceptance of the assistant.

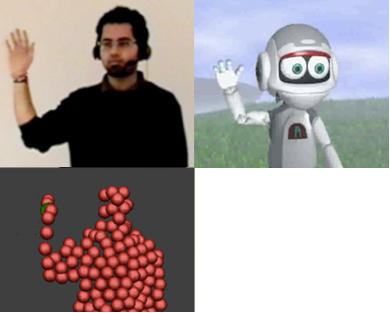

This project explored how motor cognition mechanisms for mirroring and mental simulation are employed in social interaction. We developed a hierarchical probabilistic model of motor knowledge that is used to generate communicative gestures and recognize them in others. This allowed our virtual robot VINCE to learn, perceive and produce meaningful gestures incrementally and robustly online in interaction.

Within working group 6 “Language and other Modalities” of the BMBF-funded project CLARIN-D “Research Infrastructure for Digital Humanities”, we developed methodologies for building multimodal corpora. This included the editing and integration of multimodal resources into CLARIN-D and the preparation of a web-service tool chain for multimodal data. We applied this to the Speech and Gesture Alignment Corpus (Bielefeld University), Dicta-Sign Corpus of German Sign Language (Hamburg University) and Natural Media Motion Capture-Data (RWTH Aachen University).

Conceptual Motorics (CoR-Lab; Honda Research Europe)

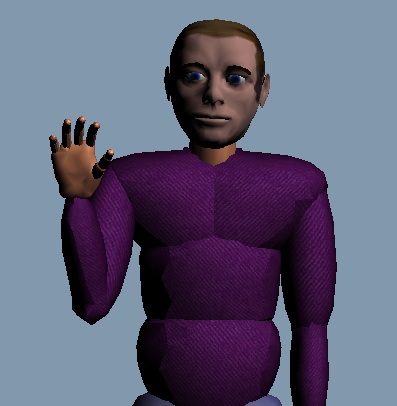

2008-2012

This project enabled the humanoid robot ASIMO to produce synthetic speech along with expressive hand gestures, e.g., to point to objects or to illustrate actions currently discussed, without being limited to a predefined repertoire of motor actions. A series of experiments gave new insights into human perception of gestural machine behaviors and how to use these in designing artificial communicators. We found that humans attend to a robot’s gestures, are affected by incongruent speech-gesture combinations, and socially prefer robots that occasionally produce imperfect gesturing.

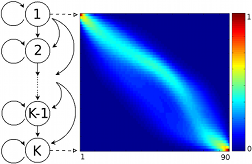

This project explored how systems can learn multivariate sequences of interaction data in highly dynamic environments. We developed models for unsupervised and reinforcement learning of hierarchical structure from sequential data (Ordered Means Models), which afford not only the analysis of sequences but also the generation of learned patterns. This was demonstrated, e.g., by enabling the agent VINCE to play, and always win, the rock-paper-scissors game.

ADECO – Adaptive Embodied Communication (CITEC)

2008-2010

Instructions about sequences of actions are better memorized when offered with appropriate gestures. In this project, the user’s memory representations while learning a complex action from speech-gesture instructions were assessed using the SDM-A method. A virtual character was then used to give instructions with accordingly self-generated gestures.

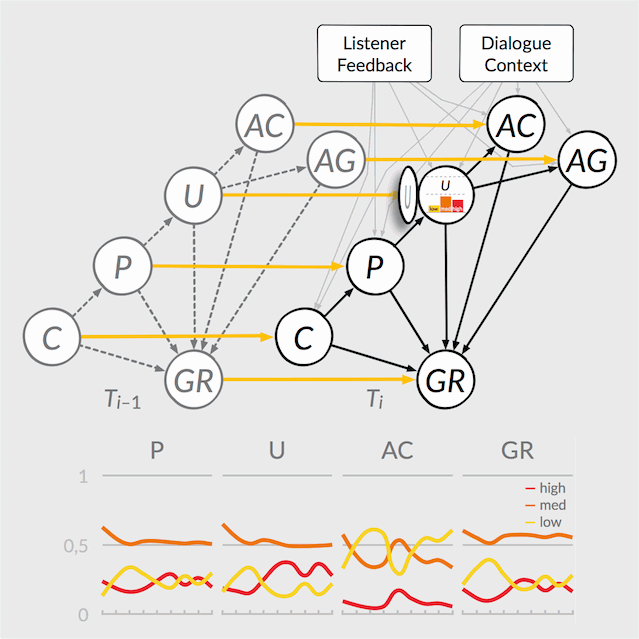

Smooth and trouble-free interaction in dialogue is only possible if interlocutors coordinate their actions with each other. In this project we develop conversational agents capable of two important coordination devices that are commonly found in human-human interaction: feedback and adaptation.