Our paper “Giving AI agents a sense of control facilitates reinforcement learning in multitasking scenarios” with Annika as first author is now out and publicly available in PLOS One.

Workshop “Multimodal creativity in speech and gesture production” at ZiF

Join us at the ZiF in Bielefeld for a workshop on “Multimodal creativity in speech and gesture production” on Dec 1st and 2nd! We are looking forward to talks by James Trujillo (U of Amsterdam), Angela Grimminger & Luyao Wang (Paderborn U), Andy Lücking (Goethe U Frankfurt), Jiahao Yang (U of Bath), and Anna Palmann (U of Amsterdam), in addition to our own Lisa Gottschalk, Alon Fishman and Lotta Heidemann (Bielefeld U). The event is organized by subproject C02 of CRC 1646.

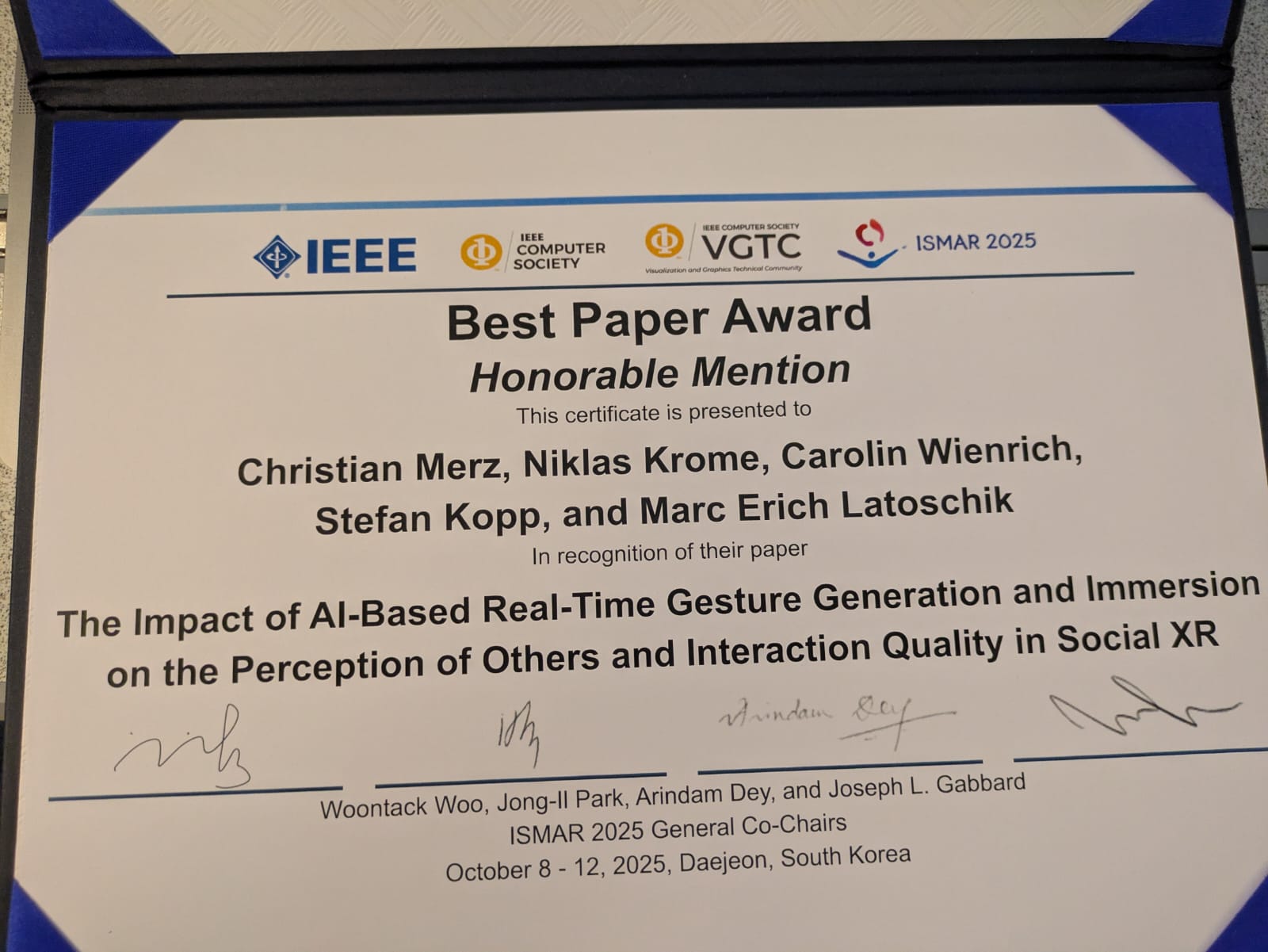

Best Paper Award Honorable Mention at IEEE ISMAR 2025

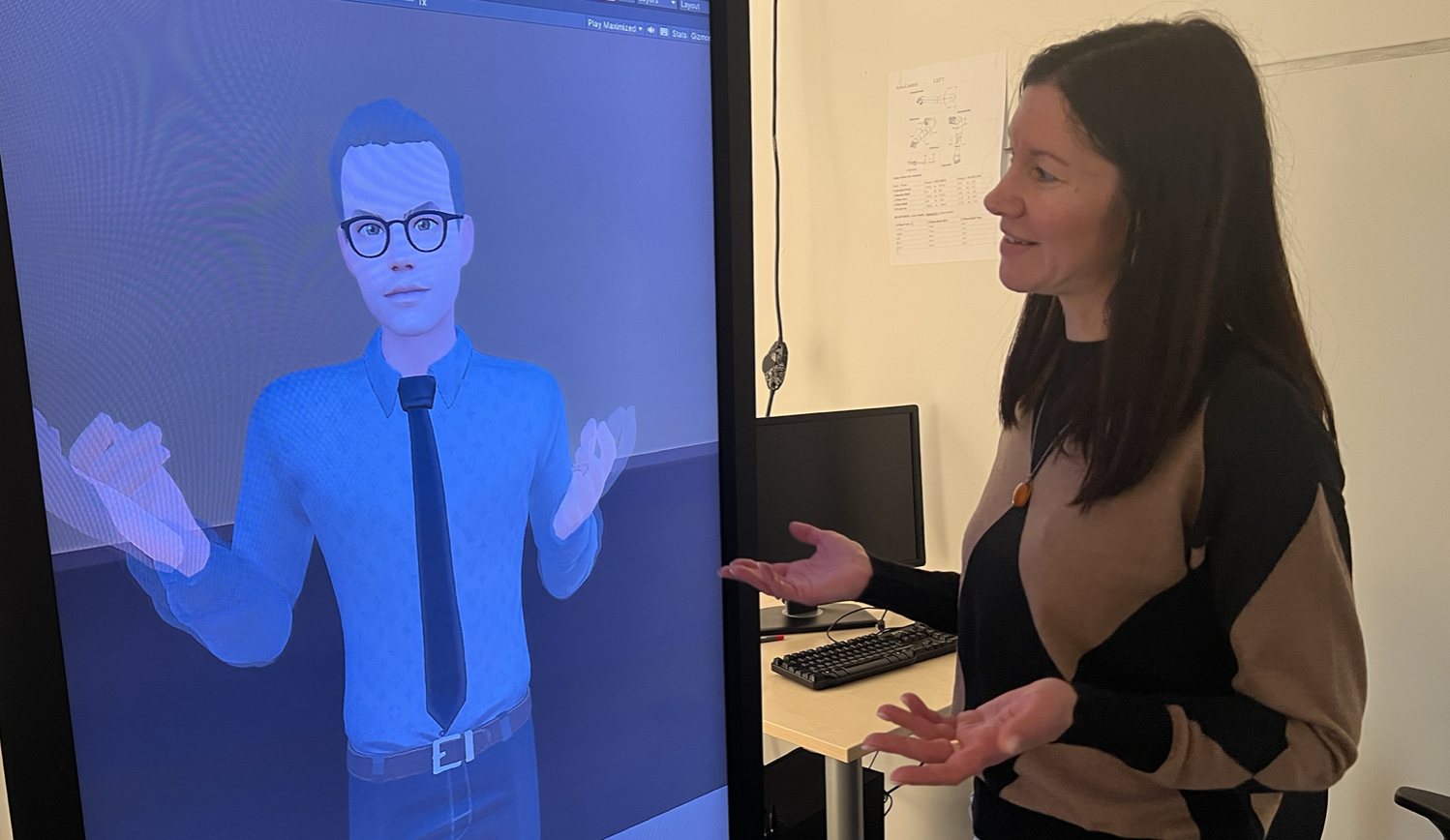

Our joint paper with colleagues from the University of Würzburg on “The Impact of AI-Based Gesture Generation and Immersion on the Perception of Others and Interaction Quality in Social XR” received a Best Paper Award Honorable Mention at the 24th IEEE International Symposium on Mixed and Augmented Reality (ISMAR 2025) in Daejeon, South Korea.

Participating in Wallenberg Advanced Scientific Forum 2025

Excited to go to beautiful Rånäs Castle near Stockholm to participate in the Wallenberg Advanced Scientific Forum 2025 and to discuss with other experts how thorough and rigorous evaluation can (and must) be a driver for generative AI.

Dialogue Researchers met at SemDial 2025 in Bielefeld

Together with Hendrik Buschmeier and Sina Zarrieß, we organized the 29th Workshop on the Semantics and Pragmatics of Dialogue (SemDial), this year called “Bialogue”. More than 50 researchers from Computer Science, Linguistics, Psychology and other disciplines met at CITEC to discuss the latest findings and advancements in conversational interaction, dialogue and language technology.

Presentations at German Cognitive Science Conference (KogWis 2025)

Amelie and Dominik gave oral presentations at the German Cognitive Science Conference (KogWis 2025), held in Bochum. Presenting work carried out in TRR 318 (in subprojects A01 and C05, respectively), Amelie talked about using cognitive partner models for adaptive explanation generation, while Dominik described how the possibly biased diagnostic reasoning of medical experts can be modeled computationally.

Speaking at RO-MAN 2025

Stefan gave a keynote at the Workshop on Interactive Task Learning in Human-Robot Co-Construction (ITL4HRI) at IEEE RO-MAN in Eindhoven (NL), speaking about the cognitive and communicative mechanisms of co-constructive task learning in human-robot interaction.

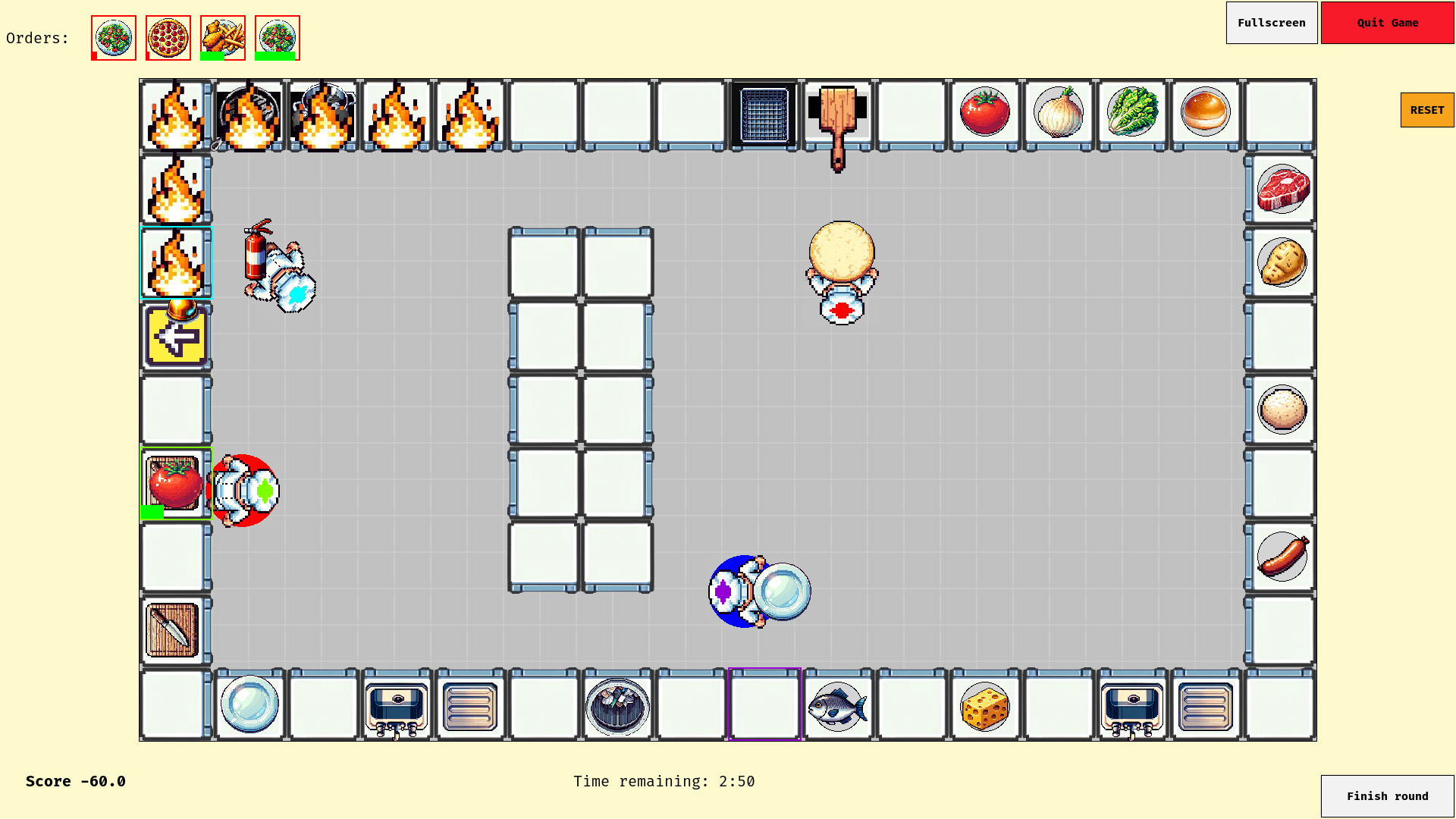

Paper on fluid human-agent collaboration out now!

Our comprehensive paper on how to make human-agent collaboration in dynamically changing environments sufficiently flexible and adaptive (i.e., fluid) has just been published in Frontiers in Robotics & AI. We describe dynamic collaboration patterns observed in humans, discuss how they can be transferred to human-agent teams, and describe which models of theory-of-mind reasoning can and must be implemented in AI agents to that end.

Making Better, More Explainable Medical Diagnoses with AI

Can artificial intelligence work side by side with doctors to help them make better diagnoses? An article features our research dedicated to answering this question. It explains how we are working on an interactive AI system that accompanies doctors as they make a medical diagnosis by reviewing and evaluating assumptions in dialog with them.

Presenting fluid human-agent collaboration at HHAI 2025

Florian went to the International Conference on Hybrid Human-AI Interaction (HHAI 2025), held in Pisa, Italy, and presented work on collaborative AI agents that combine task intelligence (perception, planning, execution) with socio-cooperative intelligence (theory of mind, multi-agent collaboration, language-based communication) to enable fluid human-AI teams.