Comparing Human Motor Learning to Reinforcement Learning Approaches

Suitable for:

Student, Bachelor or Master project

Description:

Reinforcement Learning (RL) has shown great promise in enabling robots to learn complex tasks through trial and error. However, RL often requires a large number of interactions with the environment. Human motor learning, in contrast, is remarkably efficient, allowing humans to acquire new skills within very few trials. One reason for this difference is the lack of directional feedback in RL: even a dense reward (e.g. the distance to the target) provides only a scalar feedback signal, whereas humans can exploit directional feedback about their own motion to learn more efficiently [1].
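
To make the distinction concrete, the toy sketch below contrasts the two kinds of feedback for a point cursor in 2D. It assumes the reward is the negative Euclidean distance to the target; all function names are hypothetical, and the finite-difference probing only illustrates why a scalar signal carries less information per trial. It is not how MB-DPG [1] or any other specific algorithm works.

```python
import math

def scalar_reward(cursor, target):
    """Dense scalar reward (assumed): negative Euclidean distance to the target."""
    return -math.dist(cursor, target)

def directional_feedback(cursor, target):
    """Unit vector from the cursor towards the target (assumed directional signal)."""
    dx, dy = target[0] - cursor[0], target[1] - cursor[1]
    norm = math.hypot(dx, dy)
    return (dx / norm, dy / norm) if norm > 0 else (0.0, 0.0)

def estimate_direction_from_reward(cursor, target, eps=1e-3):
    """Recover the improving direction from the scalar reward alone.

    Central finite differences need two extra reward queries per dimension,
    i.e. four queries in 2D, instead of one directional observation.
    """
    grad = []
    for i in range(2):
        plus, minus = list(cursor), list(cursor)
        plus[i] += eps
        minus[i] -= eps
        diff = scalar_reward(plus, target) - scalar_reward(minus, target)
        grad.append(diff / (2 * eps))
    norm = math.hypot(*grad)
    return (grad[0] / norm, grad[1] / norm)
```

For a cursor at (0, 0) and a target at (3, 4), `directional_feedback` yields (0.6, 0.8) from a single observation, while `estimate_direction_from_reward` needs four reward queries to recover approximately the same vector.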

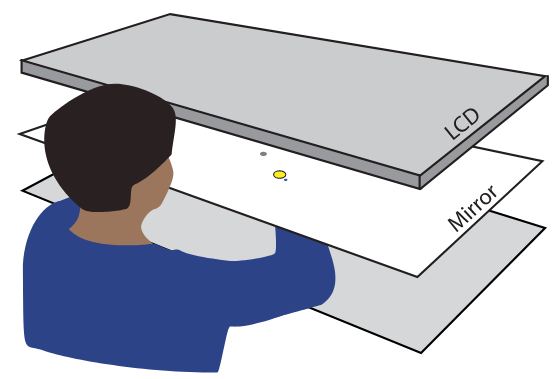

In this thesis, you should compare human and artificial motor learning on various simple 2D reaching tasks. The basic idea is to modify the usual mapping from mouse or joystick actions to cursor motion, e.g. by mirroring or rotating the cursor motion [3]. This poses a new, challenging motor learning task for the human that is of comparable complexity for an artificial agent. The goal is to compare human performance on such tasks with that of state-of-the-art RL algorithms, e.g. PPO [2] or MB-DPG [1]; the latter was proposed to mimic human motor learning more closely.
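
Both manipulations mentioned above are linear maps applied to the displacement of the input device before it moves the cursor, so they can be written as 2x2 matrices. A minimal sketch, assuming displacements are (dx, dy) tuples and that mirroring flips the horizontal axis; the names `rotation`, `MIRROR_X` and `distort` are illustrative, not part of an existing framework.

```python
import math

def rotation(angle_deg):
    """2x2 matrix rotating a displacement counter-clockwise by angle_deg."""
    a = math.radians(angle_deg)
    return ((math.cos(a), -math.sin(a)),
            (math.sin(a), math.cos(a)))

# Mirroring about the vertical axis: the horizontal component is flipped.
MIRROR_X = ((-1.0, 0.0),
            (0.0, 1.0))

def distort(matrix, delta):
    """Map a raw mouse/joystick displacement to the displayed cursor displacement."""
    (a, b), (c, d) = matrix
    dx, dy = delta
    return (a * dx + b * dy, c * dx + d * dy)
```

A fully adapted learner effectively applies the inverse map before moving the device: `rotation(-angle)` undoes `rotation(angle)`, and `MIRROR_X` is its own inverse.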

In contrast to the experiments in [3], which considered fast hand motions, the focus here should be on slow servoing tasks, in which the movement is continuously corrected based on visual feedback. How does this affect the outcomes? Does it allow for faster learning?
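
One way to see why slow, closed-loop servoing differs from fast movements is to simulate a controller that ignores the distortion entirely. The sketch below assumes a simple proportional controller that repeatedly commands `gain * (target - cursor)` while the cursor actually moves by that command rotated by θ. The distance to the target then changes by a factor of sqrt(1 - 2k·cos θ + k²) per step (k = gain), so the loop still converges whenever k < 2·cos θ, only more slowly than without the rotation, whereas for θ ≥ 90° it diverges for any gain. The function `servo` and its defaults are hypothetical, and this is a toy model of the task, not a model of human behavior.

```python
import math

def servo(angle_deg, gain=0.2, steps=200, start=(0.0, 0.0), target=(1.0, 0.0)):
    """Closed-loop servoing with a controller that is unaware of the rotation.

    Each step commands gain * (target - cursor), but the cursor moves by that
    command rotated by angle_deg. Returns the final distance to the target.
    """
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    x, y = start
    for _ in range(steps):
        # Command computed as if there were no distortion.
        ux, uy = gain * (target[0] - x), gain * (target[1] - y)
        # Displayed motion: the command rotated by angle_deg.
        x += c * ux - s * uy
        y += s * ux + c * uy
    return math.dist((x, y), target)
```

With the default gain of 0.2 and 200 steps, `servo(0)` and `servo(60)` both end essentially on the target, while `servo(90)` ends farther away than where it started. Mirroring behaves like the 90° case along the flipped axis, where the error grows by a factor of (1 + k) per step. This hints at why moderate rotations may be partly absorbed by feedback correction in slow servoing tasks.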

Key research questions

• Do humans and agents use similar or different learning strategies?

• What is the effect of various task parameters (linear vs. non-linear distortions, noise, …)?

• Can the task still be performed once visual feedback is withdrawn after an initial learning phase? How does accuracy change?
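
The task parameters in the second question can be made concrete in the same spirit. The sketch below shows one possible non-linear distortion, a speed-dependent gain that cannot be expressed as a single matrix, and additive Gaussian motor noise. Both the warp and the noise model (including `alpha` and `sigma`) are illustrative assumptions, not distortions prescribed by this project.

```python
import math
import random

def radial_warp(delta, alpha=0.5):
    """Assumed non-linear distortion: gain grows with the movement amplitude.

    Small displacements pass almost unchanged; larger ones are scaled by
    (1 + alpha * |delta|), so the mapping is not a single 2x2 matrix.
    """
    dx, dy = delta
    scale = 1.0 + alpha * math.hypot(dx, dy)
    return (scale * dx, scale * dy)

def add_motor_noise(delta, sigma=0.05, rng=random):
    """Assumed noise model: isotropic Gaussian noise added to each displacement."""
    return (delta[0] + rng.gauss(0.0, sigma), delta[1] + rng.gauss(0.0, sigma))
```

For example, `radial_warp((1.0, 0.0))` returns (1.5, 0.0) but `radial_warp((2.0, 0.0))` returns (4.0, 0.0), so doubling the input does not double the output. Passing a seeded `random.Random` instance as `rng` makes noisy trials reproducible across human and agent runs.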

Tasks

• Prepare and implement a framework to easily devise and run new 2D motor learning tasks

• Implement 1-2 RL approaches to solve these tasks

• Run studies with human users and artificial agents

• Compare and evaluate the results

• For a Master thesis: apply the findings to a visual servoing task on a real robot
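
For the first task, a framework can start as small as an environment with a pluggable action mapping. The sketch below loosely follows the reset/step convention of RL environment libraries such as Gymnasium, but it is a hypothetical, dependency-free class and not the Gymnasium API (whose `step` returns five values). The mapping, tolerance, step limit and the `visual_feedback` switch (which withholds the cursor position from the observation) are assumptions for illustration.

```python
import math
from typing import Callable, Optional, Tuple

Vec = Tuple[float, float]

class ReachingTask:
    """Hypothetical 2D reaching task; `mapping` turns a raw input into cursor motion."""

    def __init__(self, target: Vec,
                 mapping: Callable[[Vec], Vec] = lambda d: d,  # identity = undistorted
                 tolerance: float = 0.05, max_steps: int = 200,
                 visual_feedback: bool = True):
        self.target = target
        self.mapping = mapping
        self.tolerance = tolerance
        self.max_steps = max_steps
        self.visual_feedback = visual_feedback
        self.reset()

    def _observe(self) -> Tuple[Optional[Vec], Vec]:
        # Without visual feedback the cursor position is withheld.
        return (self.cursor if self.visual_feedback else None, self.target)

    def reset(self, start: Vec = (0.0, 0.0)):
        self.cursor = start
        self.steps = 0
        return self._observe()

    def step(self, action: Vec):
        dx, dy = self.mapping(action)
        self.cursor = (self.cursor[0] + dx, self.cursor[1] + dy)
        self.steps += 1
        distance = math.dist(self.cursor, self.target)
        reward = -distance  # dense scalar reward
        done = distance < self.tolerance or self.steps >= self.max_steps
        return self._observe(), reward, done
```

A mirrored task is created with `ReachingTask(target=(1.0, 0.0), mapping=lambda d: (-d[0], d[1]))`. Because the distortion lives in a single callable, the same task definition can be driven by a human input loop or by an RL agent.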