Learning Visual/Tactile Servoing Skills

Suitable for:

Student, Bachelor or Master project

Description:

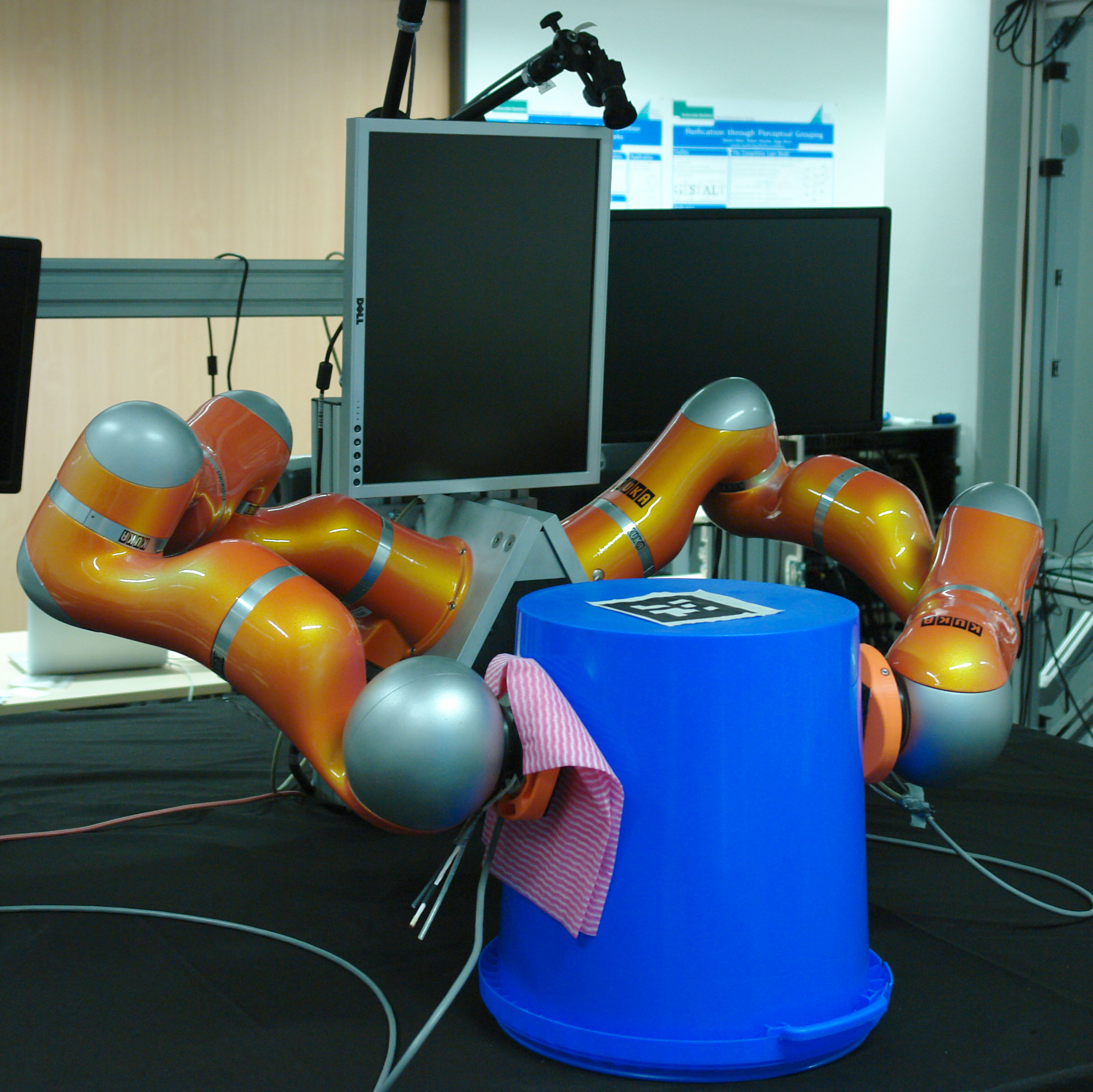

A key capability of robots is to servo their end-effector into a desired configuration relative to the environment. Classically, this is achieved by offline planning followed by open-loop execution of the planned trajectories. This approach breaks down in the presence of uncertainties, e.g. inaccurate kinematic models, errors in environment perception, or unmodeled environment dynamics. To overcome these limitations, visual and tactile servoing approaches have been proposed [1, 2, 3], which close the control loop via visual or tactile feedback.
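To make the feedback idea concrete, here is a minimal sketch of the classical servoing law v = -λ L̂⁺(s - s*) described in [1], where s are the current features, s* the desired features, and L̂ an estimate of the interaction matrix. It assumes a hypothetical linear plant whose true interaction matrix differs from the controller's model, with a unit time step; the function names and matrices are illustrative only. The sketch contrasts executing a plan open-loop with closing the loop on measured features.

```python
import numpy as np


def servo_step(s, s_star, L_hat, gain=0.5):
    """Classical servoing law: v = -gain * pinv(L_hat) @ (s - s_star)."""
    error = s - s_star
    return -gain * np.linalg.pinv(L_hat) @ error


def simulate_closed_loop(L_true, L_hat, s0, s_star, steps=50, gain=0.5):
    """Re-measure the features after every step and correct the remaining error.

    Hypothetical plant: features change linearly as s <- s + L_true @ v
    (unit time step), while the controller only knows the estimate L_hat.
    """
    s = s0.copy()
    for _ in range(steps):
        v = servo_step(s, s_star, L_hat, gain)
        s = s + L_true @ v
    return s


def simulate_open_loop(L_true, L_hat, s0, s_star):
    """Plan the full motion once with the model L_hat and execute it blindly."""
    v = np.linalg.pinv(L_hat) @ (s_star - s0)
    return s0 + L_true @ v


if __name__ == "__main__":
    L_true = np.array([[1.0, 0.2], [0.1, 0.8]])   # "real" interaction matrix
    L_hat = np.array([[1.2, 0.1], [0.15, 0.95]])  # inaccurate model of it
    s0, s_star = np.zeros(2), np.array([1.0, 0.5])
    closed = simulate_closed_loop(L_true, L_hat, s0, s_star)
    opened = simulate_open_loop(L_true, L_hat, s0, s_star)
    print("closed-loop error:", np.linalg.norm(closed - s_star))
    print("open-loop error:  ", np.linalg.norm(opened - s_star))
```

With this model mismatch, the closed-loop error shrinks geometrically while the open-loop run keeps a residual error. Feedback compensates for the inaccurate model as long as `L_true @ pinv(L_hat)` stays close enough to the identity for the loop to remain stable.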

Traditionally, visual and tactile features were designed manually. In this thesis, you will implement and evaluate modern deep-learning-based approaches to learn suitable features instead. Several methodological options are available:

- Local interaction Jacobians could be learned via self-supervised learning. A key research question is how to find a suitable feature representation that is robust, task-specific, interpretable, and ideally linear w.r.t. the control input.

- Many recent approaches use Deep Reinforcement Learning (DRL) to learn a policy end-to-end. While quite successful, these methods are very data-hungry and generalize poorly beyond their training conditions.

- Alternatively, RL could be used to explore the feature-action manifold efficiently, while the manifold itself is learned with data-efficient local linear models [4]. The reward function should focus on the controllability and predictability of action outcomes, while exploration should ensure coverage of the manifold.
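Both the Jacobian-learning option and the local-linear-model option revolve around local linear models of how actions change features. A minimal sketch, assuming a made-up nonlinear `feature_map` in place of a real camera or tactile sensor: the robot applies small random actions at one configuration, records the resulting feature changes, and fits a local interaction Jacobian by least squares. This is self-supervised because the training targets come from the robot's own observations. The negative prediction error of the fitted model then serves as one possible predictability reward; this reward definition and all function names (`collect_samples`, `fit_local_jacobian`, `predictability_reward`) are hypothetical illustrations, not the formulation of [4].

```python
import numpy as np


def feature_map(q):
    """Hypothetical nonlinear sensor model mapping a 2-DoF configuration q
    to two features; a stand-in for real visual or tactile features."""
    return np.array([np.cos(q[0]) + q[1], np.sin(q[0]) * q[1]])


def collect_samples(q, n, scale, rng):
    """Self-supervised data: try n random actions dq around q and record
    the resulting feature changes ds (no external labels needed)."""
    dq = rng.normal(scale=scale, size=(n, q.size))
    s0 = feature_map(q)
    ds = np.array([feature_map(q + d) - s0 for d in dq])
    return dq, ds


def fit_local_jacobian(dq, ds):
    """Least-squares estimate of the local model ds ≈ J @ dq.
    lstsq solves dq @ X ≈ ds, so X is J transposed."""
    J_T, *_ = np.linalg.lstsq(dq, ds, rcond=None)
    return J_T.T


def predictability_reward(J, dq, ds):
    """Assumed reward: negative mean squared error of the linear prediction."""
    residual = dq @ J.T - ds
    return -np.mean(np.sum(residual ** 2, axis=1))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    q = np.array([0.3, 0.5])
    J = fit_local_jacobian(*collect_samples(q, 200, 1e-3, rng))
    print("estimated Jacobian:\n", J)
    # Held-out actions: small ones stay in the linear regime, large ones do not.
    for scale in (1e-3, 0.5):
        r = predictability_reward(J, *collect_samples(q, 200, scale, rng))
        print(f"reward for action scale {scale}: {r:.2e}")
```

Evaluated on larger actions, the reward drops because the linear model is only valid locally; an exploration strategy would have to trade this predictability off against coverage of the manifold.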

References

- [1] F. Chaumette, S. Hutchinson, and P. Corke, “Visual Servoing,” in Handbook of Robotics, 2nd ed., Springer, 2016.

- [2] R. Haschke, “Grasping and manipulation of unknown objects based on visual and tactile feedback,” in Motion and Operation Planning of Robotic Systems, ser. Mechanisms and Machine Science. Springer, 2015.

- [3] Q. Li, R. Haschke, and H. Ritter, “A visuo-tactile control framework for manipulation and exploration of unknown objects,” in International Conference on Humanoid Robots, 2015.

- [4] M. Garibbo, C. Ludwig, N. Lepora, and L. Aitchison, “What deep reinforcement learning tells us about human motor learning and vice-versa.”