Developing a Testing Suite for a Dialogue Management System

Suitable for:

Student or Bachelor project

Description:

In this project, you will develop an automated testing suite for the dialogue management system Flexdiam that is used by our department.

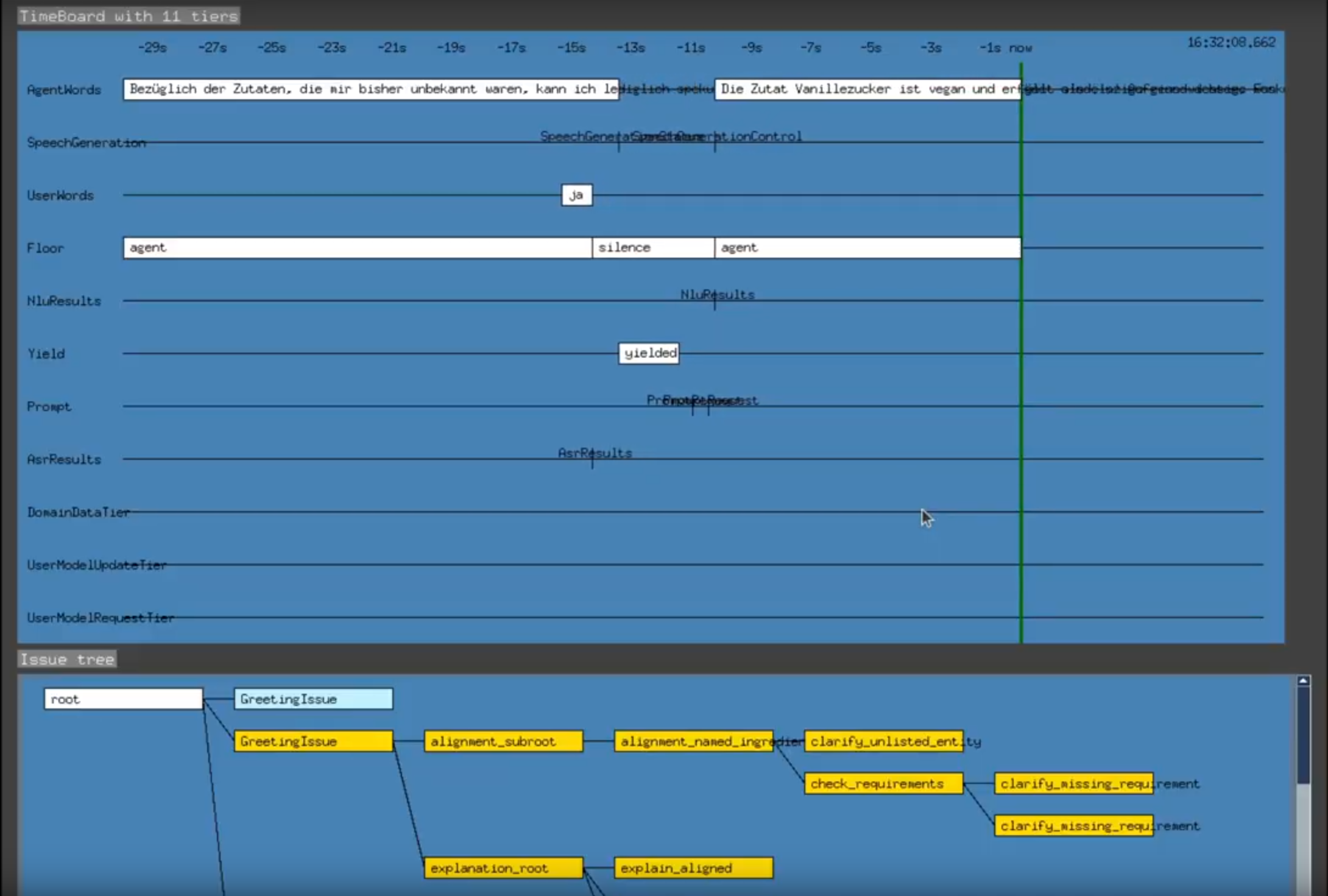

Flexdiam is integrated into a classic dialogue system pipeline: it receives input about the current user utterance from a natural language understanding module, performs dialogue planning based on this input and the current interaction state, and passes the results of this planning (e.g., what to say and how to say it) to a natural language generation module, which produces the response. Internally, Flexdiam models dialogue as a tree whose branches represent different topics under discussion or cohesive dialogue blocks (e.g., for a virtual flight booking assistant, greeting the user and responding to a user query about a flight would be different branches of the same dialogue tree). These branches model the different paths a dialogue could take, for example, depending on whether the user says “yes” or “no”.
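
To make the branching idea concrete, here is a minimal Python sketch of a dialogue tree. It is a deliberate simplification and not Flexdiam's actual data model: the `DialogueNode` class and the choice to key branches by a recognised user intent (such as "yes" or "no") are hypothetical, used only for illustration. The `enumerate_paths` function lists every sequence of user intents leading from the root to a leaf, i.e. the set of paths a tester would otherwise have to walk through by hand.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DialogueNode:
    """Hypothetical tree node: a system prompt plus branches keyed by user intent."""
    system_prompt: str
    # Maps a recognised user intent (e.g. "yes" / "no") to the next node.
    branches: dict[str, DialogueNode] = field(default_factory=dict)


def enumerate_paths(node: DialogueNode, path: tuple = ()):
    """Yield every sequence of user intents that leads from `node` to a leaf."""
    if not node.branches:
        yield list(path)
        return
    for intent, child in node.branches.items():
        yield from enumerate_paths(child, path + (intent,))


# Toy flight-booking tree: a flight query followed by a yes/no confirmation.
confirm = DialogueNode(
    "Shall I book the 9:00 flight to Berlin?",
    {
        "yes": DialogueNode("Your flight is booked."),
        "no": DialogueNode("Okay, I will not book it."),
    },
)
root = DialogueNode("Hello! How can I help you?", {"query_flight": confirm})

for p in enumerate_paths(root):
    print(p)
```

Running it prints `['query_flight', 'yes']` and then `['query_flight', 'no']`, one line per path; each such path corresponds to one dialogue scenario worth testing.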

Going through all these dialogue branches manually during testing is very time-consuming. Therefore, we would like to have an automated testing suite that operates on files defining specific dialogue scenarios: each file lists the user utterance to input at every dialogue turn, along with various types of expected outputs at different stages of processing. Several problems need to be solved during the project. For example, if a non-deterministic language generation module such as a large language model is used, a simple comparison of expected and actual output will not suffice; instead, a score needs to be computed that shows how close the generated sentence is to the expected output.
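
The sketch below illustrates both ideas from the paragraph above: a scenario file that lists the user utterance and expected outputs per turn, and a similarity score for generated text in place of exact matching. Everything here is an assumption for illustration: the JSON layout, the `fake_system` stub standing in for the real Flexdiam pipeline, and the 0.6 threshold are all hypothetical. The score uses `difflib.SequenceMatcher.ratio()` from the Python standard library, which only measures character overlap; a real suite would more likely need a semantic measure (for example, embedding similarity), since valid paraphrases can share few characters.

```python
import json
from difflib import SequenceMatcher

# Hypothetical scenario format: one entry per turn, with the user input and the
# expected outputs at two processing stages (NLU intent and generated text).
SCENARIO = json.loads("""
{
  "name": "book_flight_yes",
  "turns": [
    {"user": "I need a flight to Berlin",
     "expected_intent": "query_flight",
     "expected_text": "Shall I book the 9:00 flight to Berlin?"},
    {"user": "yes",
     "expected_intent": "confirm",
     "expected_text": "Your flight is booked."}
  ]
}
""")


def similarity(expected: str, actual: str) -> float:
    """Character-level similarity in [0, 1]; a stand-in for a semantic metric."""
    return SequenceMatcher(None, expected.lower(), actual.lower()).ratio()


def fake_system(utterance: str) -> tuple[str, str]:
    """Hypothetical stub for the real pipeline; returns (intent, generated text)."""
    if utterance == "yes":
        return "confirm", "Great, your flight has been booked."
    return "query_flight", "Should I book the 9:00 flight to Berlin?"


def run_scenario(scenario: dict, system, threshold: float = 0.6) -> list[dict]:
    """Run every turn: exact match for the intent, thresholded score for text."""
    results = []
    for i, turn in enumerate(scenario["turns"]):
        intent, text = system(turn["user"])
        score = similarity(turn["expected_text"], text)
        results.append({
            "turn": i,
            "intent_ok": intent == turn["expected_intent"],
            "text_score": round(score, 2),
            "text_ok": score >= threshold,
        })
    return results


for r in run_scenario(SCENARIO, fake_system):
    print(r)
```

Neither generated sentence equals its expected text, so an exact comparison would fail both turns, while the score-based check accepts both (roughly 0.94 and 0.74 with this stub). Keeping the deterministic stages (here, the intent) on exact matching and reserving scores for the generated text keeps failures easy to localise.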

Requirements for the project:

– Programming experience in Python

– Interest in dialogue systems and dialogue systems evaluation